On December 6, 2024, the Reserve Bank of India (RBI) announced the establishment of a high-level committee to chart a course for responsible innovation in artificial intelligence in the financial sector. The committee was tasked with developing a Framework for Responsible and Ethical Enablement of Artificial Intelligence (FREE-AI). It was chaired by Dr. Pushpak Bhattacharyya, Professor in the Department of Computer Science and Engineering at IIT Bombay and a leading voice in natural language processing and AI ethics.

About eight months of consultations, surveys, and research culminated in the August 2025 report, which sets out a comprehensive blueprint for AI adoption in India’s financial ecosystem. The report does not limit itself to technicalities; it provides a normative framework that integrates innovation with accountability, structured around six strategic pillars and 26 actionable recommendations.

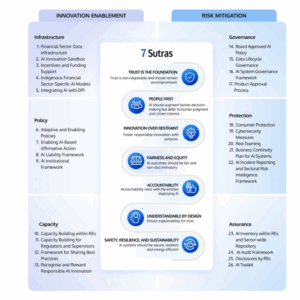

At the heart of this blueprint lie the Seven Sutras, guiding principles that the RBI envisions as the moral and operational compass for the sector:

- Trust is the foundation

- People first

- Innovation over restraint

- Fairness and equity

- Accountability

- Understandable by design

- Safety, resilience, and sustainability

This picture is part of the FREE-AI report published by the RBI in August 2025.

SIGNIFICANCE OF THE REPORT

The FREE-AI report serves as India’s answer to the rising adoption of machine learning in lending, fraud prevention, customer engagement, collections, and market surveillance. The apex bank underscores that innovation must be scaffolded by resilient governance: data infrastructure for indigenous models, capacity-building, clear policies, and robust controls for privacy, safety, and assurance.

Notably, the report is not purely theoretical. It draws on evidence from two sectoral surveys and stakeholder consultations to understand where banks and NBFCs stand today and what is blocking responsible scaling.

THE SUTRAS AS LEGAL STANDARDS (NOT JUST SLOGANS)

- Trust & People First: In financial law, “trust” is not a marketing term; it is enforceable through consumer protection, fair dealing, and the fiduciary duties embedded across banking norms. The sutra effectively calls for legibility and reliability as legal outcomes: disclosures customers can understand, recourse pathways that work, and controls that keep models from drifting into harmful or deceptive behaviour. The RBI’s framing invites duty-of-care style obligations for Boards and management when they deploy AI in “high-impact” use cases.

- Innovation over Restraint: The committee is explicit that caution must not calcify into prohibition. This is where the report’s innovation-side pillars matter: building data and compute infrastructure for India, fostering indigenous AI models, and setting up a multi-stakeholder committee to monitor opportunities and risks over time. These recommendations anticipate the classic tension between law and innovation by creating pre-market and market-adjacent spaces for experimentation (e.g., sandboxes), reducing the need to blunt innovation through blanket bans.

- Fairness & Equity: Biased AI in credit or collections can implicate the constitutional values of Articles 14 and 21 and collide with sectoral fair-lending norms. “Equity” pushes industry beyond box-ticking discrimination checks toward bias testing, monitoring, and remediation built into model governance, especially where proxies for protected attributes creep in. The sutra foreshadows impact-assessment obligations akin to global “AI risk” regimes.

- Accountability: The report anticipates the hardest legal question: who is liable when AI causes harm, the regulated entity, the vendor, or both? Expect the RBI to evolve a shared-liability posture aligned with outsourcing and third-party risk norms, with institutions retaining ultimate accountability for outcomes that affect customers and markets, whether a model is built in-house or procured. The governance and protection pillars leave room for model auditability, incident reporting, and traceability as enforceable expectations.

- Understandable by Design: This sutra bridges technical explainability (“why did the model do X?”) and legal intelligibility (“can a customer, reviewer, or court make sense of the decision?”). In practice, that means using interpretable architectures where stakes are high, and producing documentation, challenger models, and reason codes that regulators and consumers can meaningfully review.

- Safety, Resilience, Sustainability: This sutra stretches “safety” beyond cybersecurity into model robustness, adversarial resilience, and systemic risk. Think: volatility-sensitive trading models, fraud-detection overfitting, or LLMs hallucinating in collections workflows. “Sustainability” is not just environmental; it also means operational sustainability: models that can be maintained and governed over time.

RBI’S VISION FOR AI GOVERNANCE IN INDIA

Three design moves stand out:

- Six Pillars, 26 Recommendations: The architecture deliberately separates “enablement” (Infrastructure, Policy, Capacity) from “control” (Governance, Protection, Assurance), making it easier to sequence compliance: first build capabilities and shared rails, then tighten the compliance screws where necessary. Reuters’ summary of the report highlights its calls for data infrastructure, indigenous models, standing-committee oversight, and integration with India’s DPI rails (e.g., UPI), all with risk controls calibrated to use-case criticality.

- One-time supervisory tolerance: The committee urges a lenient stance toward first-time AI errors where safety mechanisms are in place, so that fear of penalties does not freeze innovation. In legal terms, this resembles responsive regulation: proportional enforcement calibrated to good-faith effort and the presence of safeguards. It is not impunity; it is a safe but finite learning zone.

- Multi-stakeholder oversight: The proposed standing committee hints at a hub-and-spoke model, with the regulator at the hub and industry, academia, and civil society contributing to horizon-scanning, standards, and playbooks. This suits a domain where technological capabilities and risks evolve faster than static rules.

IMPLICATIONS FOR FUTURE POLICIES

- Privacy & Data Protection: AI’s appetite for data meets India’s Digital Personal Data Protection (DPDP) Act, 2023. Sectoral supervisors like the RBI can, and likely will, raise the floor for financial data handling (e.g., purpose limitation, data minimisation, security safeguards), while clarifying expectations for profiling, consent in digital journeys, vendor access, and cross-border flows in financial services. FREE-AI nudges institutions to turn DPDP compliance into model-lifecycle discipline, from data sourcing and feature engineering through deployment and monitoring. (This is general legal analysis; the report frames its protection and assurance pillars without restating the statute.)

- Fair lending & consumer law: Expect ex-ante fairness testing and ex-post explainability to become standard in retail credit, underwriting, and collections. If the RBI couples this with mandatory adverse-action notices and appeal/recourse protocols, India would be aligning with global best practice while grounding it in constitutional equality norms.

- Third-party & vendor liability: Financial-sector precedent already places ultimate accountability on the regulated entity for outsourced functions. The AI twist will be back-to-back contractual obligations on vendors and developers: audit rights, data and use restrictions, IP protections and indemnities for model risks, and cooperation duties for incident response and supervisory queries, especially where models are frontier or foundation-model-based. FREE-AI’s governance and assurance pillars are compatible with that trajectory.

A PRACTICAL COMPLIANCE ROADMAP FOR REGULATED ENTITIES (BANKS/NBFCS/FINTECH PARTNERS)

- Board-approved AI policy that maps the Seven Sutras to internal standards for model risk, data governance, explainability, and human-in-the-loop thresholds.

- Model inventory and risk classification, tying controls (validation frequency, challenger models, kill-switches) to use-case criticality.

- Bias and performance testing regimen with pre-deployment impact assessments and post-deployment drift monitoring; recordkeeping to a supervisory audit standard.

- Customer-facing transparency: meaningful disclosures when AI is materially involved in decisions, accessible recourse, and human review where rights or access to finance are at stake.

- Vendor governance: contracts that embed supervision-readiness (logs, documentation), auditability, and incident-response cooperation; periodic independent assurance where warranted.

- Innovation channels: participation in sandboxes or controlled pilots, particularly for DPI-integrated use cases (e.g., UPI-adjacent fraud analytics), so learning is captured without system-wide risk.
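For teams translating the inventory, risk-classification, and drift-monitoring items above into tooling, here is a minimal sketch. It is an illustration under stated assumptions, not something the FREE-AI report prescribes: the three tiers, the `classify` rules, the control values in `CONTROLS`, and the 0.2 Population Stability Index (PSI) alert threshold (a common industry rule of thumb) are all hypothetical choices that an institution would replace with its own Board-approved standards.

```python
import math
from dataclasses import dataclass
from enum import Enum


class Criticality(Enum):
    """Hypothetical risk tiers for a model inventory."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Illustrative control baseline per tier (assumed values, not RBI requirements).
CONTROLS = {
    Criticality.LOW: {"validation_months": 24, "challenger_model": False,
                      "kill_switch": False, "human_review": False},
    Criticality.MEDIUM: {"validation_months": 12, "challenger_model": True,
                         "kill_switch": True, "human_review": False},
    Criticality.HIGH: {"validation_months": 6, "challenger_model": True,
                       "kill_switch": True, "human_review": True},
}


@dataclass
class ModelRecord:
    """One entry in a hypothetical model inventory."""
    name: str
    use_case: str
    customer_facing: bool
    affects_credit_access: bool
    vendor_supplied: bool  # tracked for vendor governance; not used in tiering here


def classify(model: ModelRecord) -> Criticality:
    """Assign a tier: decisions on access to finance rank highest (assumed rule)."""
    if model.affects_credit_access:
        return Criticality.HIGH
    if model.customer_facing:
        return Criticality.MEDIUM
    return Criticality.LOW


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between baseline and current bin proportions."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_alert(score: float, threshold: float = 0.2) -> bool:
    """Flag a model for review when PSI exceeds the (assumed) threshold."""
    return score > threshold


if __name__ == "__main__":
    inventory = [
        ModelRecord("retail-underwriting", "credit scoring", True, True, False),
        ModelRecord("txn-fraud-screen", "UPI-adjacent fraud analytics", True, False, True),
    ]
    for m in inventory:
        tier = classify(m)
        print(m.name, tier.name, CONTROLS[tier])

    baseline = [0.25, 0.25, 0.25, 0.25]  # score-band shares at validation time
    current = [0.10, 0.20, 0.30, 0.40]   # score-band shares in production
    score = psi(baseline, current)
    print(f"PSI={score:.3f}, review needed: {drift_alert(score)}")
```

With these sample numbers the production distribution has shifted enough to cross the assumed threshold, so the model would be flagged for revalidation. Deriving every control from a single tier keeps the mapping auditable: a reviewer can trace why a given model carries the controls it does.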

CONCLUSION

The RBI’s FREE-AI report is comprehensive, timely, and forward-looking. By blending ethical values, legal principles, and practical recommendations, it sets out a constitutional and regulatory vision for AI in Indian finance. Comparative experience underscores that India cannot remain insulated; it must harmonise with global best practices while tailoring them to its developmental priorities.

The Seven Sutras offer a distinctly Indian articulation of responsible AI, anchored in trust, fairness, and inclusion yet mindful of innovation and systemic resilience. What remains to be seen is how the RBI and the broader policy ecosystem will translate these sutras into binding rules, supervisory tools, and industry standards.

If done well, India can strike the delicate balance this report aspires to: fostering responsible innovation while safeguarding the values of accountability, fairness, and trust. In that sense, the Seven Sutras are not just principles; they may well become the legal and ethical DNA of AI in India’s financial future.
