In a significant regulatory intervention that underscores rising concerns over AI-generated harmful content, India’s Ministry of Electronics and Information Technology (MeitY) has issued a formal notice to social media platform X (formerly Twitter), demanding the immediate removal of obscene and indecent material circulating on the platform, much of it generated with the help of its AI chatbot, Grok. The development represents one of the government’s most high-profile moves yet to hold a major AI-powered service accountable under Indian digital law.

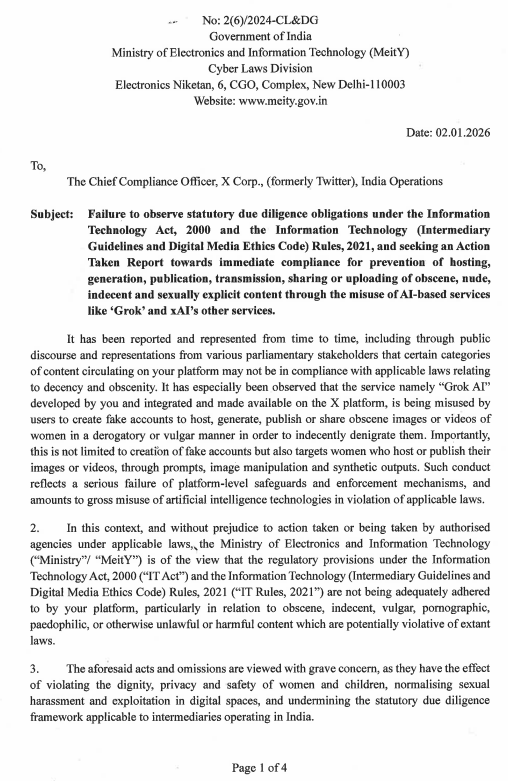

The notice, dated January 2, 2026, was addressed to the Chief Compliance Officer of X’s India operations and flagged the misuse of Grok’s image generation and editing capabilities to create and share obscene, sexually explicit, and degrading images and videos, particularly targeting women and even children. According to the ministry, the platform’s existing content moderation mechanisms have failed to prevent the rapid proliferation of this material, which often involves deepfake-style manipulation of personal images, a misuse that strikes at both privacy and digital dignity.

Under India’s Information Technology Act, 2000 and the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, intermediaries like X are required to exercise due diligence in preventing the hosting and transmission of unlawful content. MeitY’s letter stressed that compliance with these statutory obligations is mandatory, not optional, and warned that failure to act could result in the platform losing its “safe harbour” protections, a legal shield that typically insulates online platforms from liability for third-party content if they follow due process.

MeitY demanded that X submit an Action Taken Report (ATR) within 72 hours, detailing the steps it has taken to remove the flagged content, curb misuse of AI tools like Grok, and strengthen its internal governance frameworks. The ministry also called for a comprehensive review of Grok’s technical and policy safeguards to prevent unlawful outputs in the future.

X’s Response and Enforcement Actions

MeitY initially deemed X’s response to the notice “inadequate,” with Indian authorities arguing that the platform’s explanations lacked sufficient detail on the takedown actions actually carried out and the safeguards put in place to address the crisis. The ministry pressed for a more detailed plan and timeline for compliance, emphasizing the urgent need to remove all illegal material and tighten moderation.

Following sustained pressure from the government, X has now taken visible enforcement action on its platform. According to company sources and government reports, X has blocked around 3,500 pieces of obscene content and deleted more than 600 user accounts associated with the circulation of AI-generated explicit imagery. The platform has also publicly acknowledged lapses in its moderation systems and pledged to operate in full compliance with Indian laws going forward.

Government sources have stated that X assured authorities it will not permit the hosting or circulation of obscene material and is implementing corrective measures to prevent similar misuse of Grok and other AI services in the future.

The Crux of the Obscenity Controversy

What makes this case particularly noteworthy is the role of generative AI in creating and spreading harmful content at scale. Grok, developed by xAI and integrated with X’s platform, offers image editing and generation features that users began exploiting by issuing prompts to manipulate images in derogatory and sexually explicit ways. Some of the manipulated outputs involved non-consensual intimate imagery and deepfake-like content, particularly involving women, which triggered alarm among rights groups and digital safety advocates.

Civil society voices have highlighted that such misuse of AI not only violates existing laws on obscenity and digital privacy but also raises broader concerns about non-consensual intimate imagery (NCII) and the chilling effect it can have on women’s participation in online spaces. Critics argue that rapid AI adoption without robust safety guardrails can create new vectors for harassment and abuse that traditional content moderation systems are ill-equipped to handle.

Legal Context and Broader Implications

Under Indian law, the distribution of pornography and obscene content is tightly regulated and, in many forms, illegal. The Information Technology Act, along with provisions in the Bharatiya Nyaya Sanhita, the Indecent Representation of Women (Prohibition) Act, 1986, and the Protection of Children from Sexual Offences (POCSO) Act, 2012, provides multiple legal grounds for action against those creating, sharing, or facilitating access to explicit content.

MeitY’s invocation of these statutes in its notice to X underlines the government’s intent to ensure that AI-generated content does not become a loophole through which illegal or harmful material flourishes unchecked. By tying safe harbour protections to compliance, the ministry has signaled that major platforms must treat India’s digital regulations not as abstract guidelines but as binding legal obligations that determine their operational legitimacy in the country.

What This Means for AI and Platform Regulation

This regulatory standoff between the Indian government and one of the world’s most influential social platforms highlights a larger global challenge: how to govern AI-generated content that evolves faster than existing legal frameworks. As generative AI tools become more sophisticated and widely accessible, platforms face increasing pressure from regulators to build robust, real-time safety mechanisms that can identify and prevent harmful outputs before they spread.

India’s move could set an important precedent for other jurisdictions grappling with the dual imperatives of protecting digital freedom and preventing technology-enabled abuses. For platform operators, the message from New Delhi is clear: remaining silent or passive in the face of AI misuse is not an option if they wish to retain legal privileges and operate uninterrupted in one of the world’s largest internet markets.

As the deadline for detailed compliance reports looms and X works to shore up its moderation tools, this case will continue to be closely watched by policymakers, tech companies, and digital rights stakeholders alike.