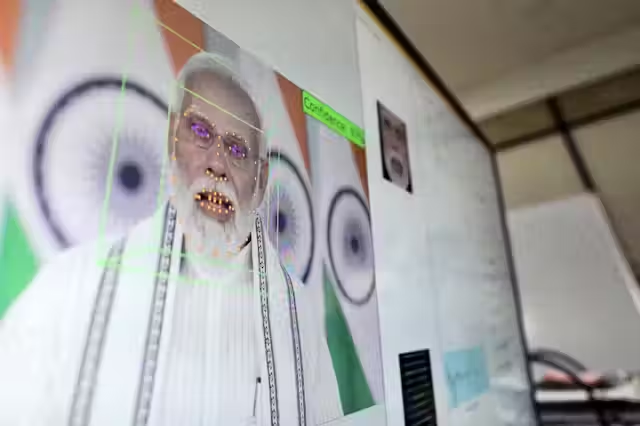

In recent years, the rise of artificial intelligence (AI) has revolutionized content creation, enabling the generation of hyper-realistic images, videos, and articles at unprecedented speed. But along with this progress comes a dark side: deepfakes, misinformation, and fake news are spreading faster than ever, blurring the line between reality and fabrication. Just weeks ago, the entertainment industry was rattled by the controversy surrounding AI-generated fake images of Aishwarya Rai, raising serious concerns about the misuse of technology to manipulate public perception and damage personal reputations.

Amid growing fears over the societal impact of such technology, India’s Parliamentary Standing Committee on Communications and Information Technology has stepped forward with a set of recommendations aimed at regulating the murky world of AI-generated content. The committee has suggested that AI content creators should be subject to licensing requirements and mandatory labelling of their content to curb the rising tide of fake news and harmful deepfakes.

Proposed Measures to Regulate AI Content Creators

In its report, the committee, chaired by BJP MP Nishikant Dubey, lays out two key proposals designed to boost transparency and accountability in the AI-driven content landscape:

- Licensing for AI Content Creators

The panel suggests that all individuals or organizations creating AI-generated content, whether images, videos, or articles, should be required to obtain licenses. This would make creators more accountable for the media they produce, especially in an era when AI-generated content can easily go viral without verification.

- Mandatory Labelling of AI-Generated Media

To help the public distinguish between AI-generated and human-created content, the committee recommends a clear labelling requirement. Every piece of AI-generated content should carry a conspicuous label disclosing its origin.

These measures are seen as critical steps to combat the dangerous spread of deepfakes and fake news, which often go unchecked on social media platforms and digital news portals.

Why Now? The Rising Threat of AI Misinformation

India is not alone in grappling with the challenges of AI-generated misinformation. Globally, regulators are realizing that unchecked AI media creation poses serious risks to democracy, individual privacy, and social cohesion. However, in India, the issue has gained particular urgency after several high-profile incidents of deepfake videos and manipulated images surfaced, sparking public outrage.

The Aishwarya Rai incident was especially significant. Fake images of the renowned actress circulated widely on social media, highlighting how advanced AI tools can be weaponized to defame public figures or spread misinformation without any accountability.

The committee’s recommendations come against this worrying backdrop, aiming to create a regulatory framework that will help safeguard citizens against deceptive digital content.

Proposed Institutional Measures to Back the Rules

To ensure these suggestions are not just theoretical, the committee has proposed concrete institutional steps:

- Inter-Ministerial Coordination

The Ministry of Electronics and Information Technology (MeitY) and the Ministry of Information and Broadcasting (MIB) should collaborate to frame comprehensive legal and technological mechanisms for detecting and penalizing the creation and spread of AI-generated fake news.

- Fact-Checking and Ombudsman System

Media organizations should be encouraged to adopt strong fact-checking mechanisms and appoint independent ombudsmen tasked with ensuring the accuracy of content published online.

While these remain recommendations at this stage, they carry significant weight and are likely to influence policy discussions at the highest levels.

Challenges Ahead

While the committee’s suggestions are a step in the right direction, several challenges will need careful consideration:

- Implementation Complexity

Defining the scope of what qualifies as AI-generated content is not straightforward. AI-generated content can be further edited or modified, which makes both detection and enforcement of labelling difficult.

- Innovation vs. Regulation Balance

Overly strict regulations could stifle innovation in AI development and limit its potential positive applications, such as in creative industries, research, and education.

- Ensuring Fairness

Small creators and startups may find licensing costly or burdensome, creating barriers to entry that favor large corporations.

Addressing these issues will require a nuanced, multi-stakeholder approach involving government agencies, technology companies, legal experts, and civil society.

Conclusion: Toward Responsible AI Content Creation

As deepfake incidents and AI-generated misinformation become more prevalent, the Indian parliamentary panel's proposal to license AI content creators and label their output stands out as a pragmatic and urgent response. It is an attempt to foster a digital ecosystem where transparency, accountability, and ethical standards are not afterthoughts, but guiding principles.

This is not just a matter of regulating technology; it is about preserving public trust, individual dignity, and democratic discourse in the digital age. As the debate unfolds, the spotlight will remain on how India can craft a balanced regulatory framework that both contains the risks and unlocks the potential of artificial intelligence.