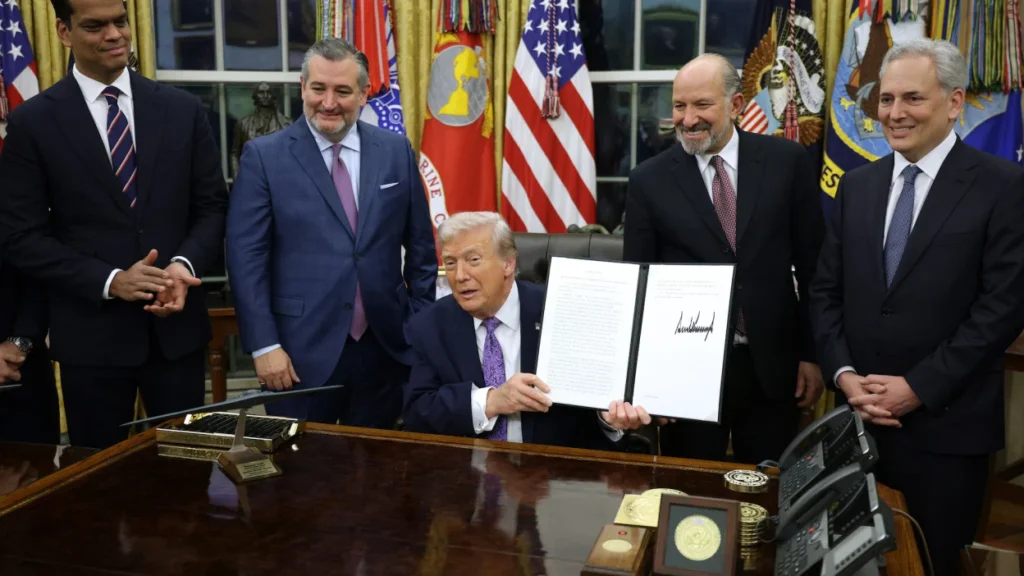

On December 11, 2025, as cameras rolled in the White House, President Donald Trump signed an executive order that would quietly redraw the balance of power over how artificial intelligence is regulated in the United States. Unlike earlier AI announcements framed around innovation or safety, this one was about something more structural: who gets to make the rules.

The order directs federal agencies to push back against state-level artificial intelligence laws that the administration views as obstacles to a unified national AI policy. Within hours, it triggered sharp reactions from state officials, legal scholars, civil society groups, and technology companies, setting the stage for what could become one of the most consequential federal–state conflicts over emerging technology governance.

What the Order Seeks to Do

At its core, the executive order asserts that AI regulation should be centralized at the federal level, warning that a growing patchwork of state laws could undermine U.S. competitiveness and burden companies operating across state lines. The administration argues that inconsistent rules on AI transparency, bias mitigation, and deployment create legal uncertainty and slow innovation.

To enforce this position, the order establishes a new litigation-focused strategy within the federal government. The Department of Justice is instructed to form a task force that will review existing and proposed state AI laws and challenge those deemed incompatible with federal policy or constitutional principles. States identified as creating “regulatory barriers” may find their laws scrutinized and potentially litigated by the federal government.

The order also asks the Department of Commerce to compile a report identifying state AI regulations that could interfere with national objectives. That review is expected to inform further legal and administrative action in the coming months.

Why States Are in the Spotlight

Over the past two years, states have emerged as the most active lawmakers in the AI space. With Congress slow to pass comprehensive federal legislation, states like California, Colorado, and New York have stepped in with laws addressing algorithmic discrimination, automated decision-making, and AI transparency.

Colorado’s AI Act, in particular, has drawn attention for imposing obligations on developers and deployers of high-risk AI systems to prevent discriminatory outcomes. California lawmakers, meanwhile, have advanced multiple bills aimed at regulating generative AI, workplace surveillance, and automated hiring tools.

The Trump administration’s order signals that this state-led approach may now face federal resistance, not because the federal government has enacted a substitute framework, but because it views state action itself as the problem.

The Administration’s Argument

White House officials have framed the order as a necessary step to protect innovation and prevent regulatory fragmentation. In their view, AI development, much like aviation or telecommunications, requires national coordination, not fifty different regulatory regimes.

The executive order also leans on constitutional arguments, suggesting that state AI laws may interfere with interstate commerce or conflict with existing federal authorities. While the order does not immediately invalidate any state statute, it lays the groundwork for federal challenges that could test these theories in court.

Supporters in parts of the tech industry have welcomed the move, arguing that compliance with multiple state laws is costly and unclear, particularly for companies deploying AI tools nationwide.

Legal and Political Pushback

Critics were quick to respond. Several constitutional scholars have noted that executive orders cannot override state law on their own, especially in areas where Congress has not clearly legislated. Without a comprehensive federal AI statute, the administration’s reliance on litigation and funding leverage may face significant legal hurdles.

State officials have also raised concerns about federal overreach. Governors and attorneys general from states with existing AI laws have warned that the order undermines local democratic processes, particularly where state legislatures acted to protect consumers and civil rights in response to federal inaction.

Civil society groups argue that the order risks sidelining safeguards designed to address real harms, such as biased algorithms in housing, employment, and criminal justice, without offering an alternative regulatory solution.

Funding Pressure and Federal Leverage

One of the most closely watched aspects of the order is its suggestion that federal funding could be used as leverage. The directive encourages agencies to assess whether states that maintain certain AI regulations should face consequences when applying for federal programs, including infrastructure and broadband funding.

This approach, while not unprecedented, has historically drawn legal challenges when federal conditions are seen as coercive. Legal analysts expect this aspect of the order to be closely examined if states decide to contest the administration’s actions in court.

A Broader Governance Gap

The executive order highlights a deeper issue in U.S. AI governance: the absence of a clear federal framework. While other jurisdictions, most notably the European Union, have moved forward with binding AI legislation, the U.S. remains reliant on a mix of voluntary guidelines, sector-specific rules, and now executive action.

By attempting to pause state experimentation without replacing it with federal law, the administration risks widening that governance gap. Even some critics of state-level regulation acknowledge that the lack of congressional action has left states filling a vacuum.

What Happens Next?

In the coming months, federal agencies are expected to begin reviewing state AI laws, and legal challenges on both sides appear likely. States may seek court protection for their regulatory authority, while the federal government may test how far it can go in asserting national control over AI policy without explicit legislative backing.

For AI developers, legal teams, and policymakers, the uncertainty is immediate. Until courts or Congress provide clarity, the rules governing artificial intelligence in the U.S. remain unsettled.

What is clear, however, is that the debate has moved beyond technical standards or ethical principles. The fight over AI regulation is now also a fight over federalism and over who gets to decide how powerful technologies shape everyday life.