A federal judge in San Francisco ruled in favor of Meta Platforms in a high-profile copyright lawsuit filed by 13 prominent writers, including Sarah Silverman, Ta‑Nehisi Coates, and Jacqueline Woodson. The complaint accused Meta of copying books from “shadow libraries” to train its LLaMA AI models.

Plaintiffs and Their Allegations

The suit, filed in 2023, accused Meta of copying the authors’ books on a massive scale, without payment or permission, to develop its language models. Much of that material allegedly came from online repositories such as LibGen. The authors argued that this amounted to “historically unprecedented pirating” that damaged their livelihoods.

Judicial Ruling: A Win for Meta, with Caveats

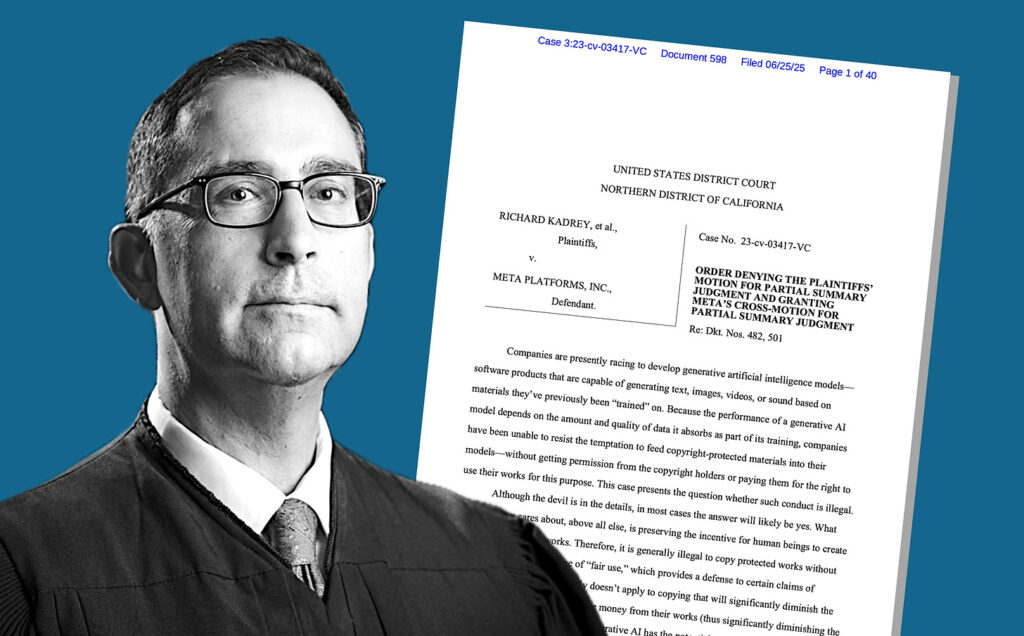

U.S. District Judge Vince Chhabria ruled that the plaintiffs had not made a legally sufficient case, but emphasized that this did not mean Meta’s conduct was lawful. The authors lost because of how they framed their case, especially their failure to develop evidence that Meta’s copying harmed the market for their books.

In the judge’s words: “This ruling does not stand for the proposition that Meta’s use of copyrighted materials… is lawful… It stands only for the proposition that these plaintiffs made the wrong arguments.” Judge Chhabria suggested that future lawsuits could succeed if they were better argued. He also stressed the decision’s limited scope: because the case was not a class action, the ruling applies only to these 13 authors.

Fair Use: Meta’s Defense

Meta invoked the U.S. “fair use” doctrine. It argued that copying works without permission can be lawful when the copies are used to create something fundamentally new, known as a “transformative” use. The company maintained that LLaMA does not reproduce the authors’ texts and that no one uses it as a substitute for reading the original works.

Implications for the AI Landscape

This was the second ruling on AI and copyright from the same courthouse that week. Days earlier, Judge William Alsup ruled that Anthropic’s use of books to train its AI models was fair use. He also held that the company could still face liability for downloading and keeping pirated copies of books. Together, these decisions suggest the judiciary is divided on how far “fair use” extends to generative AI training.

Judge Chhabria also rejected the tech industry’s argument that requiring copyright compliance would stifle innovation: “These products … will generate billions, even trillions … If … necessary … they will figure out a way to compensate copyright holders.”

Internal Revelations: LibGen, Warnings, and Zuckerberg

Unsealed court records revealed internal debates at Meta over using LibGen, with some employees raising concerns about its legality. The plaintiffs also alleged, citing internal documents, that Mark Zuckerberg personally approved the use of the dataset. These documents cast doubt on Meta’s “innocent intent” narrative and are likely to draw further public and regulatory scrutiny.

What’s Next?

Although Meta avoided a loss in this case, the ruling does not resolve the broader debate over AI training practices. The judge’s invitation to bring better-argued suits may embolden more authors to come forward. As AI becomes central to major tech strategies, more cases are likely to test the boundaries of copyright, transformative use, and compensation.

Final Thoughts

- For authors and rights holders: The judgment is not the final word. It points toward stronger legal strategies for future cases.

- For AI developers: Fair use remains a viable defense, but risk persists when commercial models rely on illegally obtained datasets.

- For regulators: The ruling underscores an urgent need for clearer legislation, possibly including licensing standards, rather than reliance on inconsistent court outcomes.